I thought I’d share some of my experience writing Polyominoes, since it has been interesting and challenging to me, and I thought it might be interesting to others. This post is primarily aimed at technical people who are curious about what is happening behind-the-scenes inside of Polyominoes as it plays against you. It might be helpful for players who want to understand the game’s strategy while playing against it, though. I cover the topic chronologically as I dealt with it during development. You can enjoy the discovery process or scoff 😜.

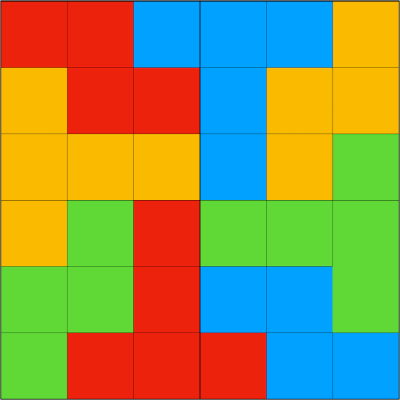

First, a bit of background on the game itself. Polyominoes is a board game app. You can play it alone, against the game, or in a multiplayer fashion against other people. Conceptually, there are four players, each with a rack of colored tiles. The board is made up of 20×20 squares, and each rack starts with 21 tiles of one color in various polyomino shapes. The typical “solo” game consists of you playing the red tiles while the game plays for the yellow, blue, and green players. The rules are simple. Each player has to place their first tile so that it overlaps a corner square of the board. After that, every tile played has to align with a corner of the same color tile, without touching any edges of the same color. Here’s a filled board to illustrate. Note how each tile only touches the corners of tiles of the same color. The player who covers the most squares on the board wins.

Having implemented the basic play/validation mechanics and underlying model for the Table, Board, Rack, and Tile, I needed to implement the logic for the game to play against you. I started out with something simple, which would then allow me to deal with the rest of the game play mechanics, scoring, logic for game outcome, and so on. For me, simple seemed like:

Find all the valid plays available on the board and rank them according to some criteria.

It’s not hard to find all the valid plays, but what criteria do I use? Don’t the criteria change as the game progresses? From my own experience, I know I want to play larger tiles first, and I know that control over the center of the board is often key to winning and maintaining a decent number of places to play as the board fills up. So to start with, I just implemented a few criteria to force play with larger tiles toward the center early in the game, and maximizing corners as the game progressed. This allowed me to deal with the game mechanics for solo play, but it wasn’t very competitive.

To Minimax or Not To Minimax?

The minimax algorithm is pretty much the primordial approach to game play. Although Polyominoes is really a four-player game, I naively thought that since the user is playing against the computer/game, I could think of it as a two-player game. This is wrong, but it didn’t stop me from implementing minimax and trying it out. It becomes quickly apparent that Polyominoes is a complex game – literally, in the formal sense of the term. For players, this complexity is part of the game’s appeal – so many possibilities of play to think about, with uncertain outcomes. As the programmer, though, the complexity means that the size of decision tree needed for minimax is ridiculously large – like atoms in the universe large. I could use some heuristics to choose a limited set of “good” moves as I constructed the tree, but even this is not practical in terms of limiting tree size.

One way to manage the complexity is to use the alpha-beta pruning technique. This approach lets you avoid constructing entire sections of the tree, and I also implemented it to try to understand how much it would help. The answer is: not nearly enough. Really the bottom line of minimax/alpha-beta and my exploration of it for Polyominoes is that the multi-player aspects of it make it impractical. It’s not enough to look a couple of moves ahead (“plies” in minimax terms) when with four players, you really need to look ahead 4x that number of moves. With the number of possible moves (even limited by making smart choices) being so large, the decision tree must be very large and time-consuming to construct to get useful results, or if artificially constrained in size, is not useful.

I needed something else.

Heuristics

Heuristics to me is just a fancy way to say “play smart”. In chess, this might involve evaluating the state of the game based on relative strength of pieces each player holds, the spatial layout, pawn positions, and so on. These are patterns you can encode in an algorithm, just like you mull them over as a player facing the board. They can change over time as the board develops. The same principles apply to Polyominoes.

The initial release of Polyominoes only used relatively crude heuristics to play against you. This consisted of ranking plays (a play being a tile in a certain orientation at a position on the board) based on a variety of factors. In the 1.1 release, I improved the ranking heuristics to include the following measures which typically vary between -1 and 1:

- Centerness – How close is the play to covering the center of the board?

- Offensiveness – How effective is the play in creating new places to play?

- Defensiveness – How effective is the play in destroying opponents’ places to play?

- Sizeness – How large is the tile?

- Openness – Will the play open up a region of the board I am blocked from?

The weighted rankings vary by the stage of the game, which is itself dependent on the board layout. So, for example, the game stage is deemed to be “early” for a given color until that color has been blocked from playing in a region of the board by the placement of other tiles. Before that point, plays that move toward the center of the board using large tiles are ranked the highest. The heuristics could be more dynamically determined based on a formalized concept of strategy, but for now, they are just tuned based on game stage.

I use the rankings in much the same way I think people play:

- Is this location a good place to play? I sort the possible play locations like you would scan the board.

- At each of these locations from best-to-worst, what tiles can I play, and which are the best plays at the location?

Two criteria I use to establish the difficulty of play are: 1) how many play locations to consider and 2) how many tiles to consider at each location. I collect a set of these locations, and when only considering the heuristics, I choose the one that ranks the highest according to the game stage. This works pretty well up to a point. In fact, the lowest difficulty levels in Polyominoes 1.1 just use heuristics to play against you. Unfortunately, though, the ranking is not very effective when it comes to “thinking ahead” – gauging the impact of a game play choice on subsequent game play, except in very gross terms.

To be competitive against expert human players, I needed something more.

Monte Carlo Tree Search to the Rescue

While exploring the possibility of using machine learning techniques in Polyominoes, I discovered that Alpha Go, Google’s human-beating computer program for playing the board game Go, used a combination of Monte Carlo tree search (MCTS) and machine learning. The machine learning is kind of the icing on the cake that makes it able to compete with Go masters. But, the MCTS algorithm is quite good in and of itself and can be used in all kinds of game play that involves uncertainty, such as real-time strategy games like StarCraft and even in selecting courses in competitive sailboat racing. It has the advantage of being able to be resource-bound – need better answers, give it more time and resources to work. It can also be parallelized, and given the power of mobile devices today, this could be a big advantage. I found this paper to be the best to understand the MCTS algorithm itself, and this paper to best to understand parallelizing of it.

In essence, starting from an initial set of possible game plays (or nodes) being evaluated, MCTS plays random moves to game completion, tracking who wins and loses for each one. Once all the initial set of nodes have been played against, the algorithm injects a degree of “exploration” into the node selection from which to play randomly. After some limited set of these random games, the node that won the most is deemed to be the “best play”. In Polyominoes terms, I select the initial set of plays based on heuristics, and then I use the parallelized MCTS algorithm to determine which is the best play. As you might expect, the best play often turns out to be the highest ranked play according to the heuristics; however, when the algorithm identifies one of the other lower-ranked plays as being superior, it is generally right.

Some other considerations in implementation:

- I spent quite a while on performance optimization. If you’re going to be playing random moves to completion of a Polyominoes game, you need to do it pretty darned quickly. I improved performance in terms of number of games per second that could be played by a factor of more than 20 by the time I stopped optimizing.

- Parallelization of MCTS gained me a factor of approximately 2 on performance and shows that it is working quite well across available cores.

- It might seem like it when you play the game, but your opponents are not ganging up on you as the sole human player. Each player considers the others to be opponents, so the algorithm is just as happy to block your plays and invade your territory as anyone else.

- Wow, iPhone speeds vary quite a lot! Check out the differences below. I realized that I wanted the difficulty levels to be the same across devices – you don’t want to pick up your old iPad and find you can beat the game, but you get walloped on your iPhone 11 Pro. So, this means I needed to limit the MCTS tree traversals to something that works on an iPhone 6S reasonably well. In the end, I think I found a pretty good balance so that high levels of difficulty could be achieved with reasonable performance (not to mention battery drain!).

| Model | Single Core | Multicore | Factor |

| iPhone 11 Pro | 1330 | 3429 | 3.46 |

| iPhone XS | 1109 | 2716 | 2.74 |

| iPhone 8 | 921 | 2244 | 2.26 |

| iPhone 7 | 743 | 1319 | 1.33 |

| iPhone 6s | 542 | 991 | 1.0 |

How Good Is it?

You will have to judge for yourself! One of my goals – and a particularly difficult problem – was to establish proper settings for the specific levels of difficulty. I wanted the free/out-of-box level to be reasonably challenging for beginners. I didn’t want it to be so easy that they could win every time with no experience, but I didn’t want it to be so hard that they became frustrated.

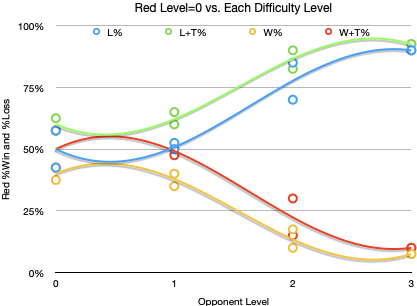

For testing purposes, since I had implemented the ability to play at different difficulty levels, I could now set the game up to play against itself. I did this by setting red to one level, and the opponents to play at a different level, tracking wins and losses across 40 games. Logically, when red (the user) is playing at the lowest difficulty level against opponents at the higher difficulty levels, red should mostly lose. Here are the results for a test run with nearly the final settings for version 1.1.

The X axis is the difficulty level for the opponents, from lowest to highest as red is kept at the lowest level, while the Y axis shows the % of red wins and losses against opponents. Polyominoes injects randomness in part of the game play to keep things interesting, so there are multiple results at each level of difficulty and curves fit to the data. When opponents are at the highest difficulty level, they can beat red at the lowest level almost all the time. What about if level 1 plays against level 2 and level 2 plays against level 3?

Here you can see that each level of difficulty is harder than the level below it.

The Tip of the Iceberg

That was a lot of work behind the scenes! In the end, this slider to select a difficulty level is all you see above the user interface sea level. Spare a thought for the rest of the iceberg as you drag the slider from Basic to Master 😅.

This is super interesting, while I have been playing a lot with Alpha Beta Miminax i has never heard of Monte Carlo Tree Search. Let me read the documents you are pointing at.

LikeLike

awesome game and super interesting write-up

LikeLike

I thought about this more and was wondering if you treat the game as one vs 3 or if the computer plays for the benefit of three different players

LikeLike

No, the computer plays with the other three players as opponents. I thought about doing that as a way to up the difficulty, but it didn’t seem fair 🙂

LikeLike

I love this game. A lot.

It’s funny how I played a while, got better, and intuitively became able to win on master level the majority of the time. Then I stopped and started thinking about different strategies, and in implementing, I think I’ve now become a worse player.

I would like to see a more complicated version of this game; larger board, bigger tiles, and maybe another rule or two. I feel like it might improve what I see is the only weakness of the game, related to the “ganging up”, by improving the players ability to overcome situations where they are cut-off by other players, and not feel like giving up because they likely have no chance of winning.

One bug; you can drop one of your tiles into another players when you are looking at theirs, and lose it. And a few random others.

I love the patterns made by the game, I’ve been doing screen captures on every board that I win.

LikeLiked by 1 person

Thanks very much for the kind words!

LikeLike